Algorithms of Oppression by Safiya Umoja Noble explores how search engines perpetuate societal biases, reflecting and reinforcing racism and sexism. This groundbreaking work examines the intersection of technology and systemic oppression, uncovering how algorithmic decisions disproportionately impact marginalized communities. By analyzing search results and their implications, Noble sheds light on the urgent need for ethical AI and inclusive digital practices. The book serves as a critical call to action, urging tech companies to address biases embedded in their systems. Through compelling case studies and sharp analysis, Noble challenges readers to rethink the role of technology in perpetuating inequality.

Overview of the Book and Its Significance

Algorithms of Oppression by Safiya Umoja Noble is a critical examination of how search engines like Google perpetuate systemic racism and sexism. The book highlights how algorithmic bias in search results reinforces harmful stereotypes, particularly against Black women and other marginalized groups. Noble’s work is significant as it bridges the gap between technology and social justice, offering a multidimensional analysis of how search technologies embed and amplify oppressive power structures. By exposing the ways in which algorithms replicate historical inequalities, the book calls for a more inclusive and equitable digital landscape. Its insights have sparked crucial conversations about the ethical responsibilities of tech companies and the need for transparency in algorithmic decision-making.

The Core Argument of Safiya Umoja Noble

Noble argues that search engines like Google are not neutral but perpetuate racism and sexism, reinforcing harmful stereotypes and marginalizing communities through biased algorithmic results.

Challenging the Neutrality of Search Engines

Noble challenges the notion that search engines like Google are neutral platforms, arguing that they perpetuate systemic biases. By examining how algorithms prioritize certain results over others, she reveals that these systems often amplify stereotypes and exclude marginalized voices. For instance, when Noble searched for “black girls,” Google returned sexually explicit content, showing how algorithmic decisions can perpetuate racism and sexism. This bias is not accidental; it is rooted in the historical and societal contexts in which these technologies are developed and deployed. Consequently, search engines become tools of oppression, reinforcing existing power dynamics rather than challenging them.

Case Studies: Examples of Algorithmic Bias

A key case study in Algorithms of Oppression is the search for “black girls,” which, when Noble conducted it, returned sexually explicit and stereotypical results. Noble’s analysis reveals how such outcomes perpetuate harmful stereotypes and systemic oppression, underscoring the need to critically examine claims that technology is neutral.

The “Black Girls” Search and Its Implications

As documented in Algorithms of Oppression, the search query “black girls” returned sexually explicit or stereotypical content when Noble ran it. This troubling outcome shows how search algorithms can perpetuate racial and gender biases, marginalizing Black women and girls. Such results not only reflect societal prejudices but also reinforce them, contributing to systemic oppression. Noble argues that these outcomes are not accidental; they are shaped by historical and contemporary power dynamics embedded in technology. The implications are profound, highlighting the urgent need for accountability and ethical reform in algorithmic systems.

Understanding Algorithmic Oppression

Algorithmic oppression arises when human-designed algorithms embed bias, producing systemic discrimination and reinforcing societal inequalities. By building and deploying these systems, tech companies perpetuate such biases, with deep consequences for marginalized communities.

How Search Engines Reinforce Racism and Sexism

Safiya Umoja Noble argues that search engines like Google perpetuate racism and sexism by prioritizing harmful stereotypes and marginalizing oppressed groups. For instance, searches for “black girls” returned sexually explicit content, reflecting systemic biases embedded in algorithmic logic. These biases, rooted in historical and societal inequalities, are amplified by search engines, creating a cycle of oppression. The profit-driven nature of tech companies further exacerbates these issues: because their business models depend on advertising and engagement, ethical considerations take a back seat. The result is a system that perpetuates harmful stereotypes, reinforces systemic racism, and limits opportunities for marginalized communities to be represented fairly online.

The Role of Tech Companies in Perpetuating Bias

Tech companies like Google embed and amplify systemic biases through their algorithms, prioritizing profit over equity and perpetuating oppressive power dynamics in search results.

Google and the Power Dynamics of Search Results

Google’s algorithms play a pivotal role in reinforcing systemic inequalities by prioritizing certain content over others, often marginalizing oppressed groups. Safiya Umoja Noble’s examination reveals how search results for terms like “black girls” initially yielded sexually explicit content, perpetuating harmful stereotypes. This bias reflects broader societal inequities embedded in algorithmic systems. Google’s dominance in the search market amplifies these issues, affecting public perception and access to information. The case underscores the urgent need for transparency and accountability in algorithmic decision-making to mitigate such biases and promote equitable representation in digital spaces.

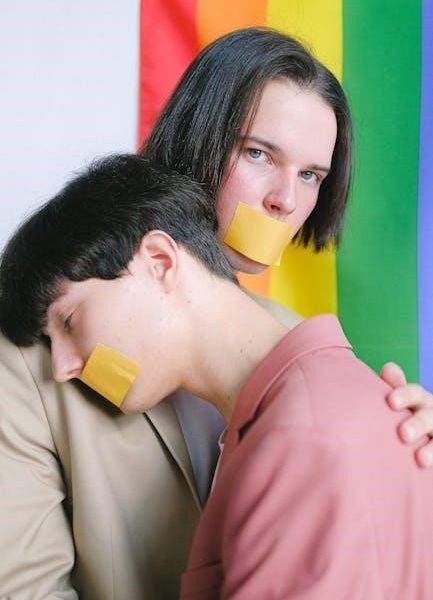

Societal Implications of Algorithmic Bias

Algorithmic bias perpetuates systemic inequalities, disproportionately affecting marginalized communities by limiting opportunities and reinforcing negative stereotypes, as highlighted in Algorithms of Oppression.

Impact on Marginalized Communities

Marginalized communities face amplified harm from algorithmic bias, as search engines often prioritize stereotypes over accurate representations. Searches for “black girls,” for instance, returned sexually explicit content, perpetuating harmful narratives. This reinforces systemic racism and sexism, limiting opportunities and visibility for women of color. The suppression of diverse perspectives in search results further marginalizes these groups, making it harder for them to challenge oppressive stereotypes. Similar concerns have been raised about other platforms, such as TikTok, where recommendation algorithms may create echo chambers that amplify prejudiced views and deepen societal divides. Such biases not only reflect existing power imbalances but also exacerbate them, making digital spaces hostile for marginalized individuals.

Solutions and Recommendations

Noble advocates for empowering marginalized groups in algorithmic decision-making, promoting inclusive design practices, and fostering accountability to address biases. Together, these measures aim to create fairer digital spaces for all.

Empowering Oppressed Groups in Algorithmic Decision-Making

Empowering marginalized communities means involving them directly in the design and auditing of algorithms to ensure fairness. Noble emphasizes the need for structural changes within tech companies, such as diversifying development teams and incorporating diverse perspectives. Decentralizing power allows oppressed groups to reclaim agency over their digital representation. This approach fosters inclusive technologies that challenge systemic biases. Transparency and accountability in algorithmic processes are also crucial, enabling marginalized voices to redefine their digital narratives and counter oppressive frameworks. Ultimately, empowering these groups is essential for creating equitable digital ecosystems that reflect and respect all identities.

Critical Reception and Academic Response

Scholars and activists have praised Algorithms of Oppression, citing its critical analysis of search engine biases and its impactful contribution to understanding racism in digital spaces.

How Scholars and Activists Have Received the Book

Algorithms of Oppression has been widely praised by scholars and activists for its insightful critique of algorithmic bias and its societal implications. Many have highlighted the book’s ability to bridge academic and public discourse, making it accessible to a broad audience. Activists particularly appreciate its emphasis on the need for ethical tech practices and its call for marginalized communities to have a greater role in shaping technology. The work has also been recognized for its interdisciplinary approach, combining sociology, technology studies, and critical race theory to provide a comprehensive analysis of digital oppression.

Broader Implications for Digital Justice

The book underscores the need for ethical AI and inclusive technologies to ensure digital justice. Addressing algorithmic oppression is crucial for fostering fairness and representation in society.

The Need for Ethical AI and Inclusive Technologies

The development of ethical AI and inclusive technologies is essential to combat algorithmic oppression. By prioritizing diversity in design teams and incorporating diverse datasets, tech companies can reduce biases. Ethical frameworks must be implemented to ensure AI systems serve all communities fairly. Inclusive technologies empower marginalized groups, fostering equity in digital spaces. Safiya Noble emphasizes that ethical AI is not just a technical issue but a societal one, requiring collaboration across industries to create systems that promote justice and equality for all.

Safiya Umoja Noble’s work is a call to action, urging the dismantling of algorithmic biases and the creation of ethical AI for a fair digital future.

A Call to Action for a Fairer Digital Future

Noble’s work emphasizes the need for ethical AI and inclusive technologies to combat algorithmic oppression. By prioritizing transparency and accountability, tech companies can empower marginalized communities.

Advocating for diverse representation in algorithmic decision-making can help produce fairer outcomes. This call to action urges collective responsibility for dismantling biases and creating a digital landscape that promotes equity for all.